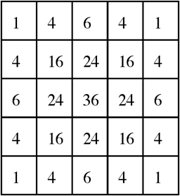

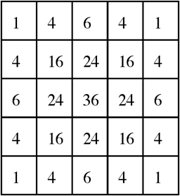


Note: I have had trouble deciding whether to describe the problem in CUDA terms or OpenCL terms, so the mix below can be a bit confusing. Anyway, "blocks" = "work groups" and "thread" = "work item".

It can hardly be stressed enough how important it is to utilize local (shared) memory when doing GPU computing. Local (shared) memory allows us to re-arrange memory before writing, to take advantage of coalescing, and to re-use data to reduce the total number of global memory accesses.

In this exercise, you will start from a working OpenCL program that applies a linear filter to an image. The original image is shown to the left, and the filtered image to the right.

Your task is to accelerate this operation by preloading image data into local (shared) memory. You will have to split the operation into a number of work groups (blocks), and each work group should read only the part of the image that is relevant for its computation.

Note that there are several ways to do image filtering that we do not take into account here. First of all, we can use separable filters. We may also use texture memory to take advantage of its cache. Neither is required here; the focus is memory addressing and local (shared) memory.

You need to use __local to declare local (shared) memory, e.g. "__local unsigned char buf[64];" allocates a 64-byte array.

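After loading data into local memory, and before processing it, you should synchronize with barrier(CLK_LOCAL_MEM_FENCE), which makes the local writes of all work items in the group visible before any of them continues. barrier(CLK_GLOBAL_MEM_FENCE) orders global memory accesses instead. Beware that a global fence can be slow when you only need a local one!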

Lab files:

lab6beta.tar.gz (old version, should be obsolete)

filter.c/filter.cl is a naive OpenCL implementation that does not use local memory. ppmread.c and ppmread.h read and write PPM files (a very simple image format). CLutilities help you load files and display errors.

NOTE: We don't expect you to make a perfectly optimized solution, but your solution should, to a reasonable extent, follow the guidelines for a good OpenCL kernel.

You only need to support one filter size, but I recommend that you support a variable size. The preset 5x5 filter (KERNELSIZE = 2) might not be large enough to show a significant difference, due to the startup time of OpenCL. I got more significant differences with larger sizes in my solution. With 7x7, I got a fairly consistent 3x speedup (in Southfork) over the naive kernel.

The first target (above) is to reduce global memory access, but have you thought about coalescing, control divergence and occupancy? Be prepared for additional questions on these subjects.

NOTE: The first run of a modified kernel tends to take more time than the following ones. Run more than once to get an accurate number.

QUESTION: How much data did you put in local (shared) memory?

QUESTION: How much data does each thread copy to local memory?

QUESTION: How did you handle the necessary overlap between the work groups?

QUESTION: If we wanted to increase the block size, roughly how large work groups would be safe to use in this case? Why?

QUESTION: How much speedup did you get over the naive version?

QUESTION: Were there any particular problems in adding this feature?